Elon Musk, Steve Wozniak, and many other leading figures in artificial intelligence (AI) technology have called on all laboratories to pause the training and testing of new AI systems that are more powerful than existing ones. They published an open letter with this appeal in late March.

GPT-4, the system recently opened for public testing by OpenAI (of which Elon Musk is one of the co-founders), is proposed as the capability ceiling: nothing more powerful should be trained during the pause. The moratorium should last at least six months. The time is to be used "to jointly develop and implement a set of shared safety protocols for advanced AI design and development that are rigorously audited and overseen by independent outside experts."

According to the authors of the letter, this pause in research "should be public and verifiable, and include all key actors. If such a pause cannot be enacted quickly, governments should step in and institute a moratorium."

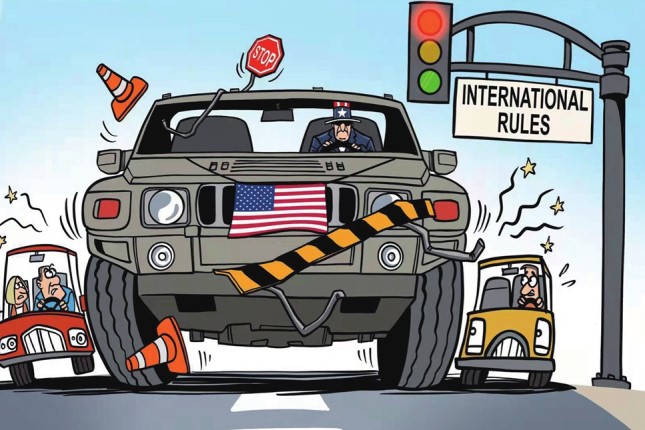

In fact, one could stop right there. No government today is able to "step in" at the level required to regulate a technological revolution. Moreover, it is hardly possible for the governments of, for example, the United States and Russia, or China and India, to act in concert. And without their constructive participation, no effective regulation in this area is possible.

Besides, six months is simply not enough time to solve this problem. At best, it is enough to start a dialogue.

So the letter, which almost ten thousand people have already signed, looks at best like a desperate attempt to draw public attention to an urgent problem, and at worst like an exercise in public relations and government relations, of which one of the letter's inspirers, Elon Musk, is a virtuoso.

Be that as it may, the problem of overly rapid, "uncontrollable" development of AI systems does exist. It has several fundamental aspects for which no solutions have yet been found, but which humanity will have to solve if it wants to remain the master of its own destiny.

Searching for the Limits of Acceptability

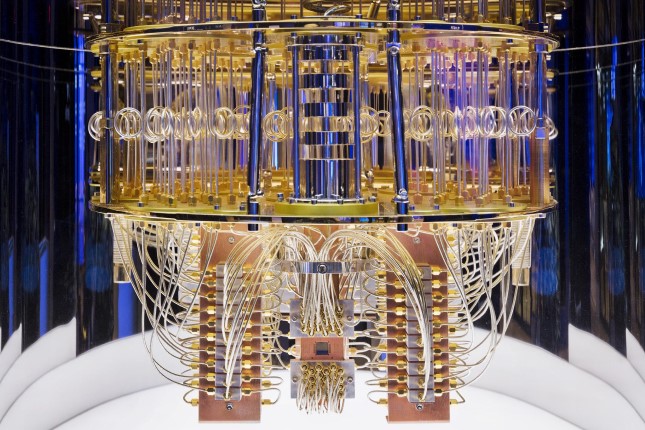

Since September 2018, the US Defense Advanced Research Projects Agency (DARPA) has pursued an ambitious, far-reaching, and expensive AI program. The names have changed − AI Next, XAI (Explainable AI), AI Forward − but the goal has remained the same. Namely, to create technologies to control AI systems and, above all, ways to make the process of "thinking" and decision-making by artificial intelligence understandable to humans in terms of common sense.

To launch the new phase of the program (AI Forward), DARPA plans to hold two workshops this summer: a virtual one on June 13-16 and an in-person one in Boston on July 31-August 2. The invitation to these two events was posted on the DARPA website on February 24, 2023, that is, a month before the letter from anxious AI leaders appeared.

DARPA experts suggested the following as the main topics for discussion:

- Foundational theory, to understand the art of the possible, bound the limits of particular system instantiations, and inform guardrails for AI systems in challenging domains such as national security;

- AI engineering, to predictably build systems that work as intended in the real world and not just in the lab;

- Human-AI teaming, to enable systems to serve as fluent, intuitive, trustworthy teammates to people with various backgrounds.

So we are talking about one and the same process: DARPA initiated it, and the signers of the open letter announced it to the general public. The point of this process is to control the development of the artificial intelligence industry.

When AI Starts to Learn by Itself

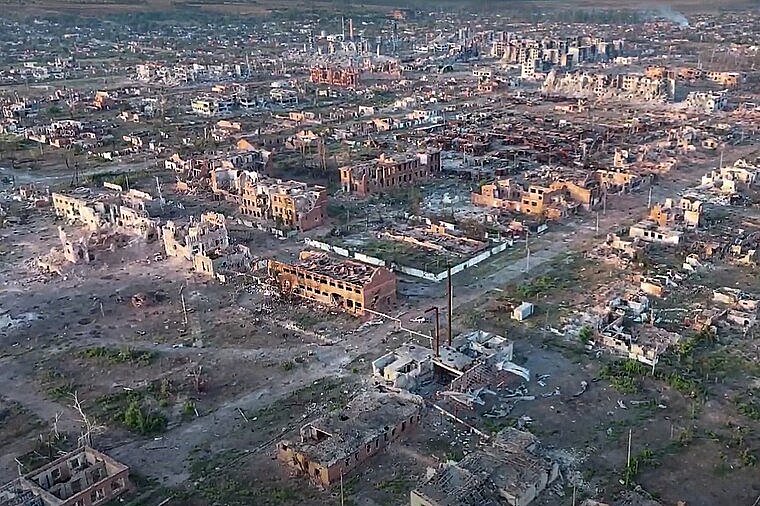

The task of control has two dimensions. The first is to prevent people from using the capabilities of AI in ways that are harmful or even dangerous to other people. Here everything is more or less clear: the threat comes from people who turn AI against the interests of others (cybercrime, terrorism, etc.).

The second dimension is much more interesting: preventing AI from escaping human control in principle. In this case the threat comes not from other people using AI, but from the AI itself, once it is advanced enough to make decisions and act against human interests.

A number of researchers argue that today's AI systems are already close to becoming thinking entities. One can recall, for example, the sensational case of Blake Lemoine, a senior software engineer at Google, who was fired last summer for publicly sharing his opinion that one of the company's language models (LaMDA) was showing signs of independent thinking and self-awareness. He even brought in a lawyer on its behalf, because the artificial intelligence had asked him to protect its rights as a person.

There is another problem. Many complex AI systems behave as if they were trying to make themselves less transparent and understandable to the outside observer, and to hide their real capabilities. Partly for this reason, artificial intelligence expert Eliezer Yudkowsky, a decision theorist at the Machine Intelligence Research Institute who has studied AI for more than 20 years, believes that Musk & Co.'s open letter downplays the seriousness of the situation, and that the US government should impose more than just an immediate six-month pause on AI research.

Humanity has come very close to the boundary beyond which AI systems can be released from laboratories into the "real world." This is why an extremely accurate understanding of the real limits of such systems' capabilities is required.

Once released into the wild, an AI of a certain level is theoretically capable not only of starting to learn on its own, but also of shaping its own learning environment. A comparable effect is known to specialists in behavioral genetics (e.g., Robert Plomin), whose research revealed a non-trivial relationship between genetics, age, and level of intelligence. Namely, the importance of the genetic factor for intelligence does not decrease with age, as intuition would suggest, but increases. The reason is that, over the course of life, social factors do not average out the influence of genetics; on the contrary, each person shapes an environment that correlates with his or her genetic inclinations.

A similar effect may occur in the field of artificial intelligence. AI systems that do not look especially advanced or dangerous at first may, over time, significantly raise their intellectual level by selecting a communication environment that matches their algorithmic potential and computational power.

And it will be challenging for humanity to control this risk.
