Well, at the risk of sounding like President Richard Nixon, let me just say: I am not a bot. I do understand your concern, though. Artificial intelligence systems are spreading everywhere.

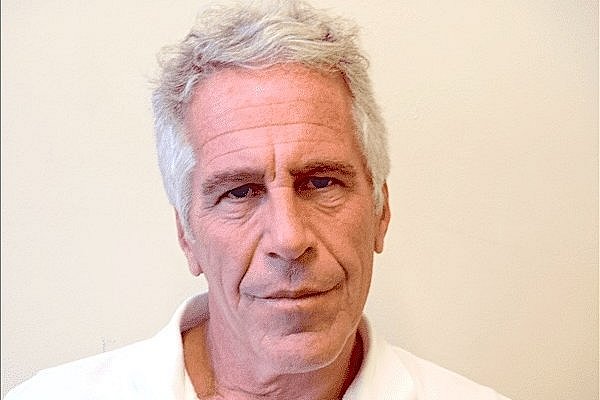

Six years ago, Russian President Vladimir Putin said bluntly that whoever "becomes the leader" in the field of artificial intelligence "will be the ruler of the world." It is a prediction that remains terrifying. From Putin's point of view, nuclear arsenals, aircraft carriers, and even the "soft power" of global reserve currencies will become increasingly insignificant when compared with the power of AI.

Putting aside the philosophical question of whether advances in AI research are approaching the threshold of creating sentient "life," it is helpful to think of these systems as performing complex sets of computations at rapid speed. Computer code is essentially a list of rules instructing a computer how to operate: for example, if this happens, then execute this sequence of steps. These algorithms give computers basic problem-solving abilities that mimic human intelligence.
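To make the "if this happens, then execute this sequence of steps" idea concrete, here is a minimal sketch in Python. The events, the steps, and the names `RULES` and `respond` are all invented for illustration and are not drawn from any real system.

```python
# Hypothetical rule table: each event maps to a fixed, pre-written sequence of steps.
RULES = {
    "door_opened": ["turn_on_light", "log_entry"],
    "smoke_detected": ["sound_alarm", "unlock_exits", "call_fire_department"],
}


def respond(event):
    """Return the pre-programmed steps for an event, or an empty list if no rule exists."""
    return RULES.get(event, [])


print(respond("smoke_detected"))
print(respond("earthquake"))  # no rule was written for this event, so nothing happens
```

The second call is the telling one: an event the programmer never anticipated produces no response at all.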

No matter how sophisticated the computer, though, its "thinking" is still limited to the set of instructions forming its code. When a computer executes commands, it is acting within the discrete specifications that human programmers have already provided.

Now imagine a computer executing the following command: if this happens, then execute the best available option. This type of complexity requires a computer to know all the available responses to a given problem, to employ a method for evaluating those responses' relative effectiveness, and to choose a course of action. Still, even extremely advanced computers are limited by the information inputs made available and the rules of logic that have been written to govern the computer's "thinking." True AI breaks through these limitations.
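The "best available option" command can be sketched the same way: list the known responses, score each one, and pick the top scorer. The function name `best_available_option`, the route names, and the travel times below are assumptions made up for this example.

```python
def best_available_option(options, score):
    """Evaluate every known option with the scoring rule and return the highest scorer."""
    if not options:
        raise ValueError("no options available")
    return max(options, key=score)


# Hypothetical inputs: three known routes and their travel times in minutes.
travel_minutes = {"highway": 25, "side_streets": 40, "toll_road": 18}

# The scoring rule is written by a human: a shorter trip earns a higher score.
choice = best_available_option(travel_minutes, score=lambda route: -travel_minutes[route])
print(choice)
```

Even here, the program can choose only among the routes it was given and only by the scoring rule a person wrote for it, which is exactly the limitation described above.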

One way to think of AI is to envision a computer that is capable of spontaneously writing new code, or rules, for its own operation. That might be akin to some kind of rudimentary "consciousness." Now imagine that a computer with this rule-writing ability is also capable of filtering through unlimited collections of information and making unlimited evaluations of that information in the process of choosing the best available option. Whereas normal human intelligence might consider several possibilities in response to a given problem, an AI system would eventually be capable of evaluating an exponentially larger number of possibilities while executing "decisions" faster as processing capabilities increase. An AI system's "thinking" will continue to advance, constrained only by the limits of its human-designed hardware and the laws of thermodynamics. Should AI "intelligence" transform those limits into mere speed bumps, then, theoretically, an AI system will continue to "evolve" as it approaches an infinite number of calculations within an infinitesimal amount of time.

That kind of "intelligence" would be so far beyond a human's understanding that a sufficiently advanced AI system would no longer be able to explain to its original programmers the complex calculations underlying its "reasoning." Barring human-imposed programming controls or some kind of doomsday switch, AI's vastly superior "intelligence" would give it a capacity for dominion over this world.

From this perspective, it is easy to appreciate Putin's contention that the master of AI "will be the ruler of the world." It might make more sense to wonder whether AI will eventually be the master of AI and the future "ruler of the world."

Incipient forms of artificial intelligence are already transforming the economy. Investors use quantitative trading algorithms ("quants") to identify and execute market trades more quickly than human brokers can. Proto-AI "bots" now account for nearly half of all Internet traffic, and almost a third of human users can no longer tell whether they are interacting with a live person or a machine. Shoppers transact with basic AI systems when making purchases both online and in the self-service checkout lines of many physical stores. AI-controlled robots are replacing blue-collar workers in once well-paying industrial and manufacturing jobs. It is reasonable to wonder whether Adam Smith's "invisible hand" of the marketplace will someday soon be replaced with the "incomprehensible hand" of artificial intelligence, in which raw materials are gathered, manufactured items are produced, and goods are bought and sold not because there has been a "meeting of the minds" between humans but rather because there has been a "meeting of the codes" between AI systems.

As artificial intelligence advances in the number of computations and "decisions" it is capable of making in shorter periods of time, three stages will likely occur:

(1) AI will increase human productivity in whatever field it is deployed.

(2) AI will make "decisions" that are not immediately obvious to human handlers but prove correct.

(3) AI will make "decisions" that seem inexplicable to human handlers who do not possess a sufficient level of intelligence to judge the "correctness" of the AI's reasoning.

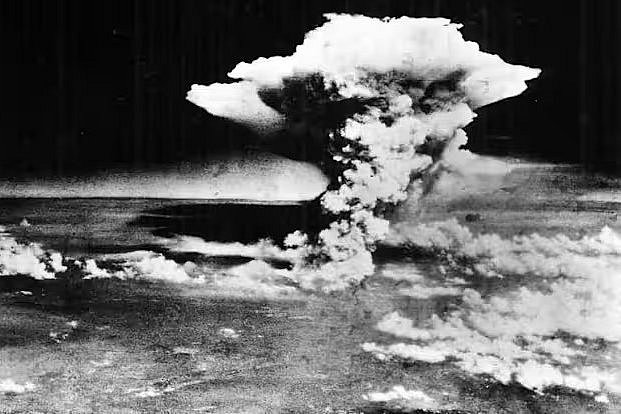

Science fiction writers have been warning about the third stage for decades. Should AI become sufficiently advanced, as well as sufficiently untethered from human control, then the likelihood increases that an AI system would make a "decision" that — from a human's point of view — is categorically immoral and unacceptable. The unsettling question haunting the field of artificial intelligence is whether a non-human AI would have any qualms about being inhumane.

Within the field of warfare, artificial intelligence will revolutionize battle tactics at each of these stages. In the first stage, AI is a force multiplier. By rapidly filtering through information and computing the best available option at any point during a battle, AI will perform tasks that previously required the efforts of hundreds of different specialized operatives and warriors.

By the second stage, AI will make battlefield decisions too quickly for human operators to check them in time. The AI system's method for evaluating various options may well be grounded in its human-designed programming, but its real-time "decisions" will have lethal consequences.

The third stage is when things become ever more dangerous. A sufficiently advanced AI that rapidly analyzes information, computes the best available option, and executes "decisions" may act in ways that are not only inexplicable to human intelligence but also downright horrifying. Crossing moral "red lines" might seem perfectly reasonable to an AI system strictly committed to accomplishing mission objectives. Would an AI system commit "crimes against humanity" if it concluded that its actions would save humanity from a much worse fate? Such moral complexity will only grow more troublesome.

What quietly began decades ago, and will only accelerate over the next few years, is a brand-new arms race between competing nation states seeking to build the most sophisticated AI system. Expecting an ever-evolving AI "intelligence" to remain constrained by its programmers' national interests, though, may prove hopelessly naïve.

In Douglas Adams's popular science fiction series, The Hitchhiker's Guide to the Galaxy, a supercomputer calculates that the answer to the ultimate question of "life, the universe, and everything" is forty-two. That answer is funny because it makes no sense. As AI advances well past the limits of human comprehension, it may well compute answers and make "decisions" that are equally befuddling. The repercussions for humanity, however, may not be nearly so humorous.

If artificial intelligence develops to the point where human cognition is to an AI system what an ant's cognition is to a human's, then why should anyone expect human notions of morality to constrain a future AI's actions? After all, no one would consult an ant about a moral dilemma. That conundrum should weigh heavily on us all as nation states escalate their AI research programs and the world bears witness to this newest arms race.

PS: I can already hear that reader's voice: "Yeah, yeah, that's just what a 'bot' would say."

Source: American Thinker.